Translated Article: EU-US Summit Against Secure Encryption

Gipfel EU-USA gegen sichere Verschlüsselung by Erich Moechel for fm4.ORF.at

The agenda of a virtual meeting of senior officials, taking place in two weeks, covers nearly every data protection topic that is currently controversial in Europe.

Joe Biden’s appearance before the EU Council of Ministers will be followed on April 14th by a two-day video conference between top-level EU and US officials in the fields of justice and homeland security. Practically every data protection issue currently in dispute is on the agenda, from cross-border access to data for law enforcement to joint action against secure encryption.

The same goes for the “fight against child abuse”, which is once again being instrumentalized for these general surveillance projects. Ylva Johansson, the EU Commissioner for Home Affairs, has launched a consultation that closes on April 15th. It is peppered with leading questions, and the options include both criminalizing encryption as such and monitoring all Internet communication.

Transatlantic front against E2E encryption

On the transatlantic conference agenda, the items “Responsibility for online platforms” and “Challenges with encryption and law enforcement” are tucked away in the last third. There, the Portuguese Council Presidency will present the highly controversial Council resolution “Security through encryption, security despite encryption” from November. This resolution is nothing new for the US side, which had already adopted an almost identical statement in October within the “Five Eyes” espionage alliance, under the heading “End-to-end encryption and public safety”.

The concept called “Exceptional Access”, which a senior GCHQ technician named Ian Levy presented back in 2018, also comes from this alliance. In essence, it means that secure end-to-end encryption between two or more participants will no longer be possible, because providers are obliged to create duplicate keys. In addition, there has to be a master key and some form of content retention, since chats, for example, are not stored permanently.

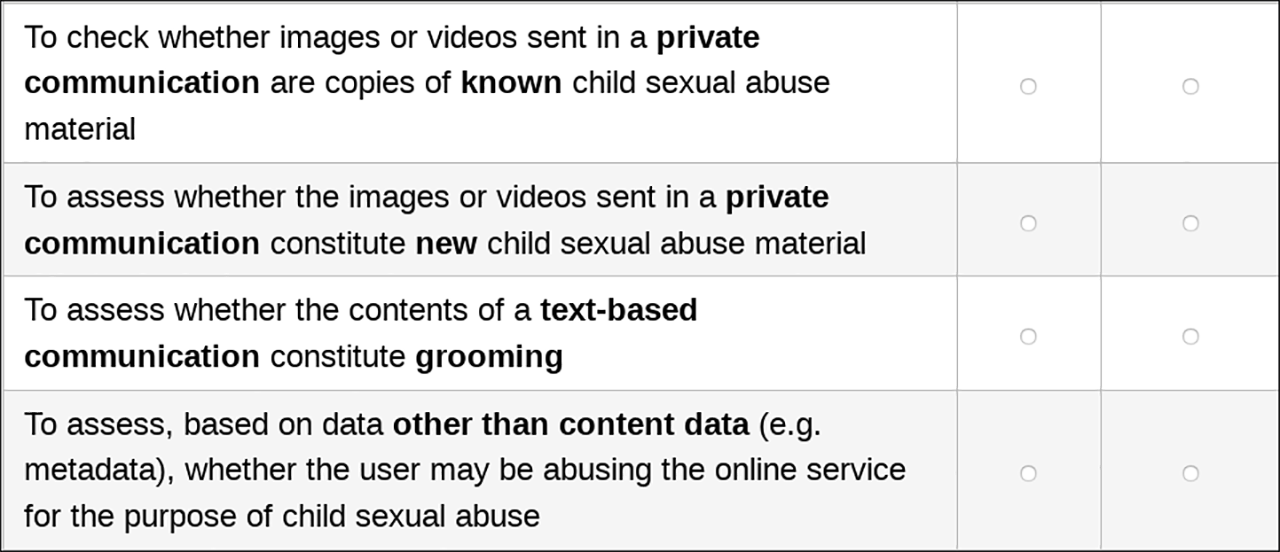

Here the Commission’s consultation asks which kinds of communication should be searched, and for what. Mind you, this is about private chats between individuals or small groups. Notably, the consultation also considers automatically scanning and analyzing the text of private chats.

The Commissioner’s leading questions

The Commissioner’s consultation covers nearly every item on the crackdown on encryption that will come up at the officials’ summit. As the screenshot above shows, the consultation does not linger on irrelevant matters such as citizens’ fundamental rights, but gets to the point as early as question five, on the main purpose of this initiative against child abuse: “Some of the tools providers use to voluntarily detect, report and delete sexual abuse in their services do not work in encrypted environments. If there was a legal obligation to detect, report and delete abuse … should that obligation also apply to encrypted communications?”

The fact is that there is currently no such obligation for providers in either the USA or the EU. It is also a fact that the European Court of Justice, starting with data retention, has struck down every such disproportionate mass surveillance measure as a violation of fundamental rights. Question six is even more explicit: “If yes, what form should this legal obligation take?” The only yes/no option is: “Important providers of encrypted online services should be obliged to maintain technical capacities in order to proactively discover, report and delete child abuse.”

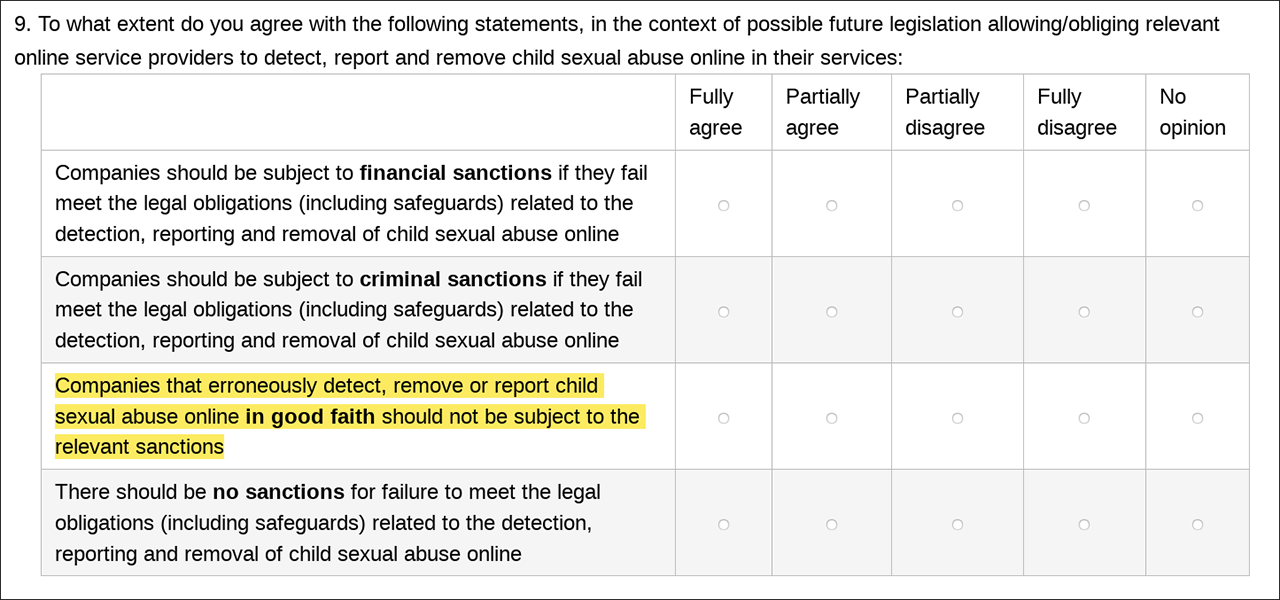

By question nine, it must have dawned on the Commission’s authors that such a totalitarian surveillance regime inevitably comes with significant error rates, which plainly raises the question of the industry’s liability.

Private chats, heuristics, mandatory reporting

The planned monitoring regime also includes a reporting obligation, and that is where the problems begin, especially since the monitoring is done by algorithms, not people. As the screenshot shows, the Commission asks whether private (!) chats should be searched only for known child abuse images, or also for new, previously unknown ones. Searching for known images means that a digital fingerprint (hash) of each picture or video has to be computed and compared with the fingerprints stored in a database. Even here, error rates of a few percent occur.
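To illustrate how matching against known images works, and why even this approach is not error-free, here is a minimal Python sketch. It assumes a simple “average hash” computed on tiny, already downscaled grayscale images as a stand-in for production perceptual hashes such as Microsoft’s proprietary PhotoDNA; the function names, the 2×2 image size and the distance threshold are hypothetical choices for illustration only.

```python
# Minimal sketch of fingerprint matching against a database of known images.
# ASSUMPTION: a toy "average hash" on tiny grayscale grids stands in for
# production perceptual hashes (e.g. PhotoDNA), whose algorithm is proprietary.
from typing import List, Sequence

Image = List[List[int]]  # grayscale pixel values 0..255, already downscaled


def average_hash(img: Image) -> int:
    """Return the hash as an int: one bit per pixel, 1 if brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known(img: Image, known_hashes: Sequence[int], max_distance: int = 1) -> bool:
    """Flag the image if its hash is within max_distance bits of any known hash.

    The tolerance lets slightly altered copies still match, but it is also
    what lets unrelated images with a similar coarse structure collide.
    """
    h = average_hash(img)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


if __name__ == "__main__":
    known = [average_hash([[10, 200], [200, 10]])]
    print(matches_known([[12, 198], [205, 9]], known))   # True: re-encoded copy
    print(matches_known([[200, 10], [10, 200]], known))  # False: inverted pattern
    print(matches_known([[30, 90], [80, 40]], known))    # True: unrelated image collides
```

The third call shows a false hit: a completely different, low-contrast image happens to share the same coarse brightness pattern. Practical perceptual hashes use 64 bits or more, which makes such collisions rarer but not impossible.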

If, however, heuristic methods, for example in the form of “artificial intelligence”, are used to estimate the probability that an image shows child abuse based on skin tones, colour distribution and body size, the number of false hits in particular rises considerably. There are no reliable figures yet for this specific use, but for comparable AI projects a false hit rate of ten to fifteen percent is already considered quite good. As a consequence, such algorithmic “hits” have to be checked by humans.

Who gets hit by false hits?

For WhatsApp alone, such a regulation would mean millions of images worldwide every day that would have to be checked by people, either at the operator or at the reporting office. In other words, reviewers would be busy looking through holiday photos of families with children, sent privately via WhatsApp to grandparents and other relatives. Then there are the teenagers who send each other suggestive selfies, as is common in sexting among young people. These are by far the largest groups of users of this chat application who would be affected by false hits once algorithms break the confidentiality of their communications.
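Why false hits dominate at this scale can be shown with a back-of-the-envelope calculation. The Python sketch below assumes that the “ten to fifteen percent false hits” mentioned above is a false positive rate on innocent images; the daily volume, the share of genuinely illegal images and the detection rate are purely hypothetical inputs, not measured data.

```python
# Back-of-the-envelope sketch: how many flagged images are false hits?
# ALL inputs are hypothetical assumptions, not figures from any real service.
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ScanScenario:
    images_per_day: int         # hypothetical daily volume of scanned images
    prevalence: float           # assumed share of images that are actually illegal
    false_positive_rate: float  # share of innocent images wrongly flagged
    true_positive_rate: float   # share of illegal images correctly flagged


def daily_flags(s: ScanScenario) -> tuple[float, float]:
    """Return (false hits, true hits) expected per day."""
    illegal = s.images_per_day * s.prevalence
    innocent = s.images_per_day - illegal
    return innocent * s.false_positive_rate, illegal * s.true_positive_rate


def share_of_flags_that_are_false(s: ScanScenario) -> float:
    """Fraction of all flagged images that a human reviewer would find innocent."""
    false_hits, true_hits = daily_flags(s)
    return false_hits / (false_hits + true_hits)


if __name__ == "__main__":
    # Hypothetical: 10 million images a day, one in a million illegal,
    # 10% false positive rate, 90% detection rate.
    scenario = ScanScenario(10_000_000, 1e-6, 0.10, 0.90)
    false_hits, true_hits = daily_flags(scenario)
    print(f"false hits per day: {false_hits:,.0f}")  # false hits per day: 999,999
    print(f"true hits per day: {true_hits:,.0f}")    # true hits per day: 9
    print(f"share false: {share_of_flags_that_are_false(scenario):.4%}")  # share false: 99.9991%
```

Under these assumptions, almost every image a reviewer looks at is innocent. Because the result is driven mainly by the ratio of innocent to illegal images, lowering the false positive rate shrinks the review pile but leaves false hits the overwhelming majority.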